The official Ollama Python library provides a high-level, Pythonic way to work with local language models. It wraps Ollama's local REST API. Instead of building raw HTTP requests, you call plain Python functions, which makes model management, chatting, and customization easier to write and to read. In this guide, you'll learn how to interact with Ollama models from Python, covering everything from listing models to chatting, streaming, generating text, showing model info, and managing custom models.

Prerequisites

- Ollama installed locally, with the Ollama service running on your machine.

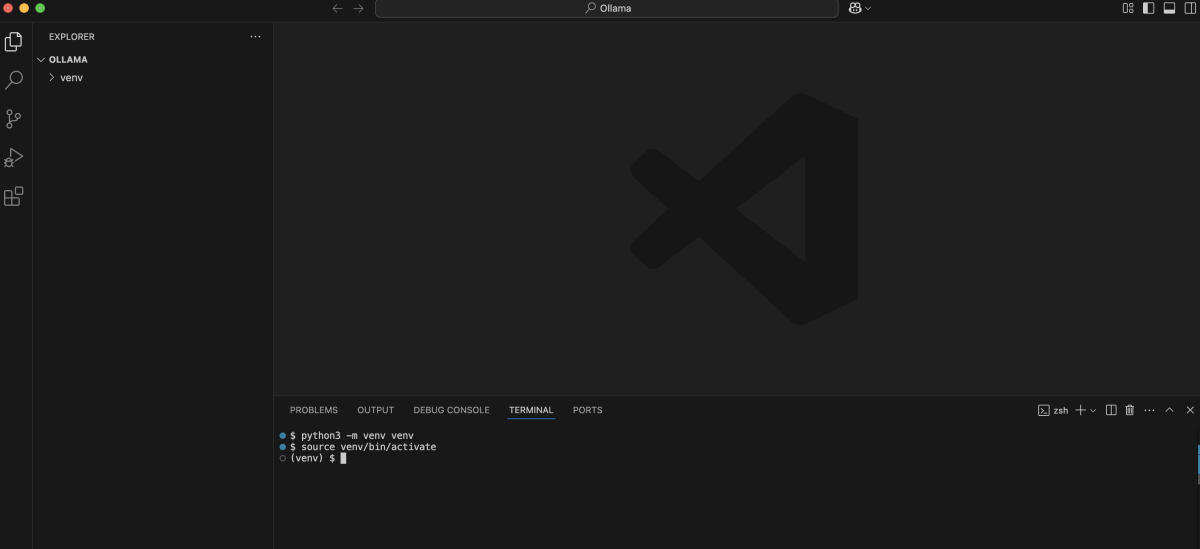

- A Python virtual environment, created and activated:

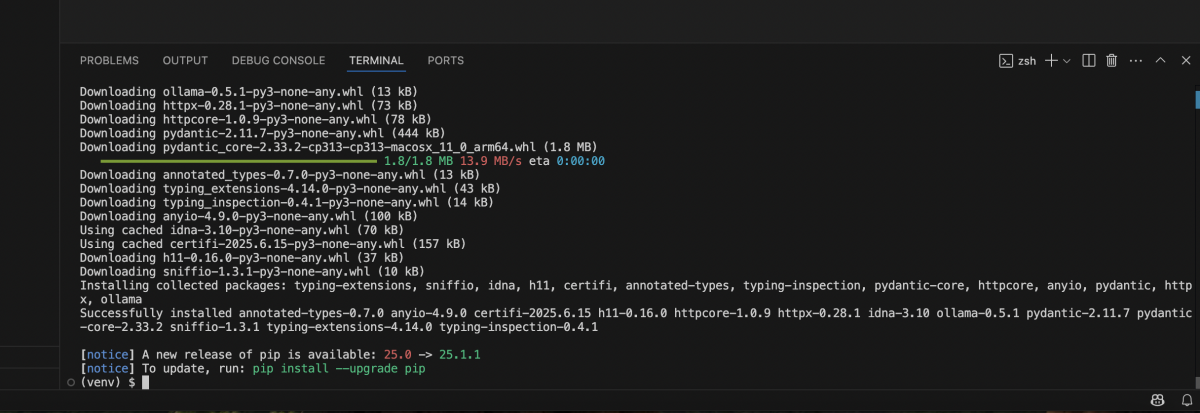

- The Ollama Python library, installed into that environment with `pip install ollama`:

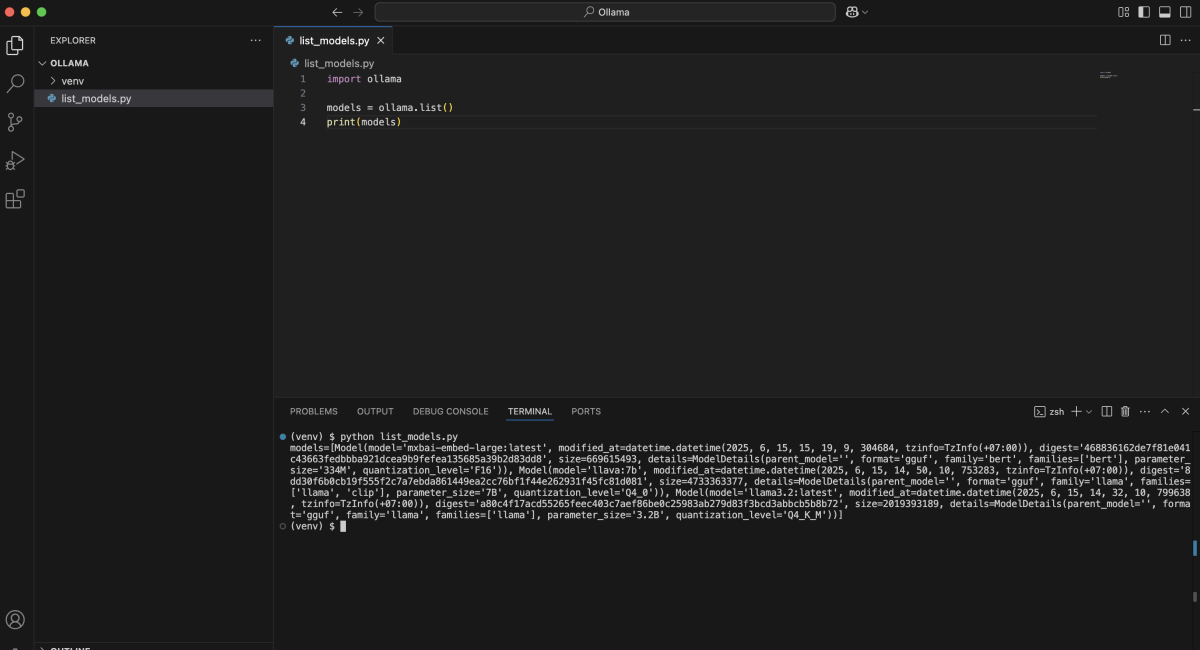

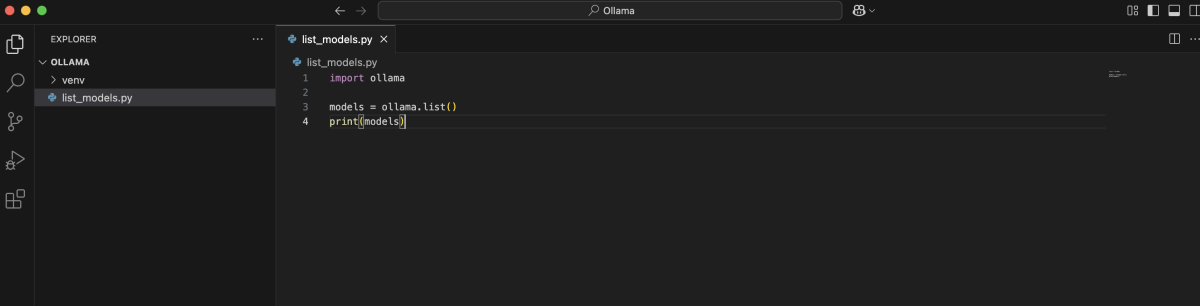

Listing Available Models

You can list all models currently available in your Ollama instance with the `list` function.

How to run:

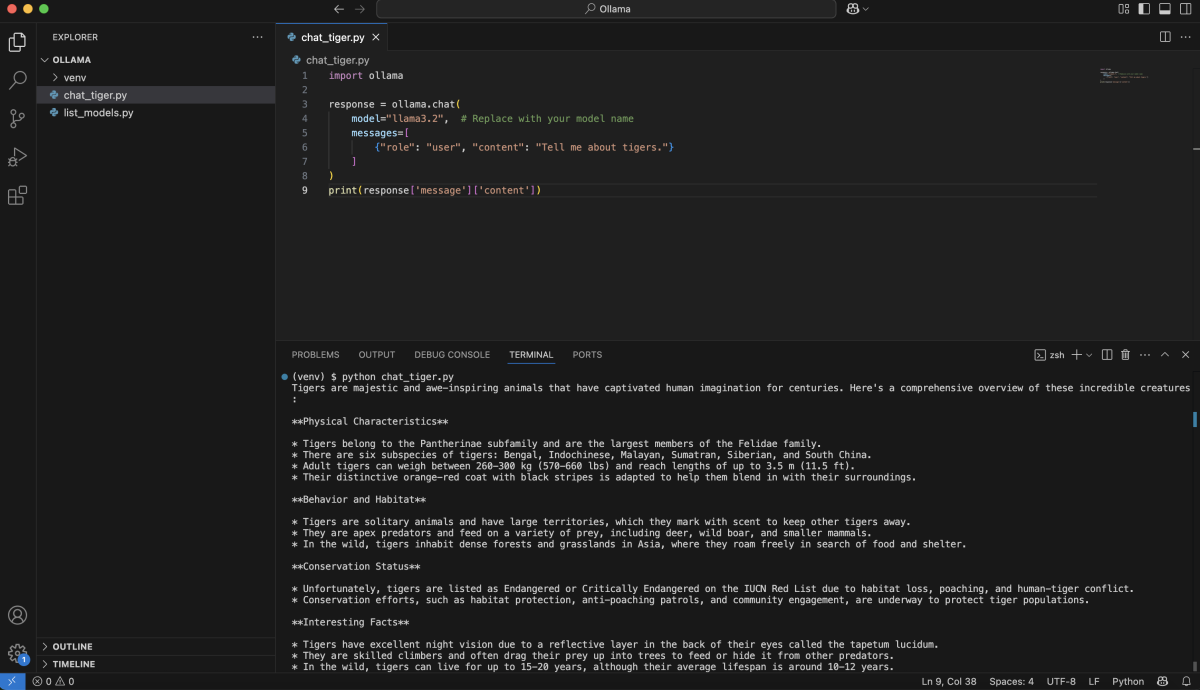

Chatting with Models

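You can have multi-turn conversations with models using the `chat` function. It takes the conversation so far as a list of messages, each with a `role` (such as `user` or `assistant`) and `content`. This is ideal for building chatbots or assistants.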

How to run:

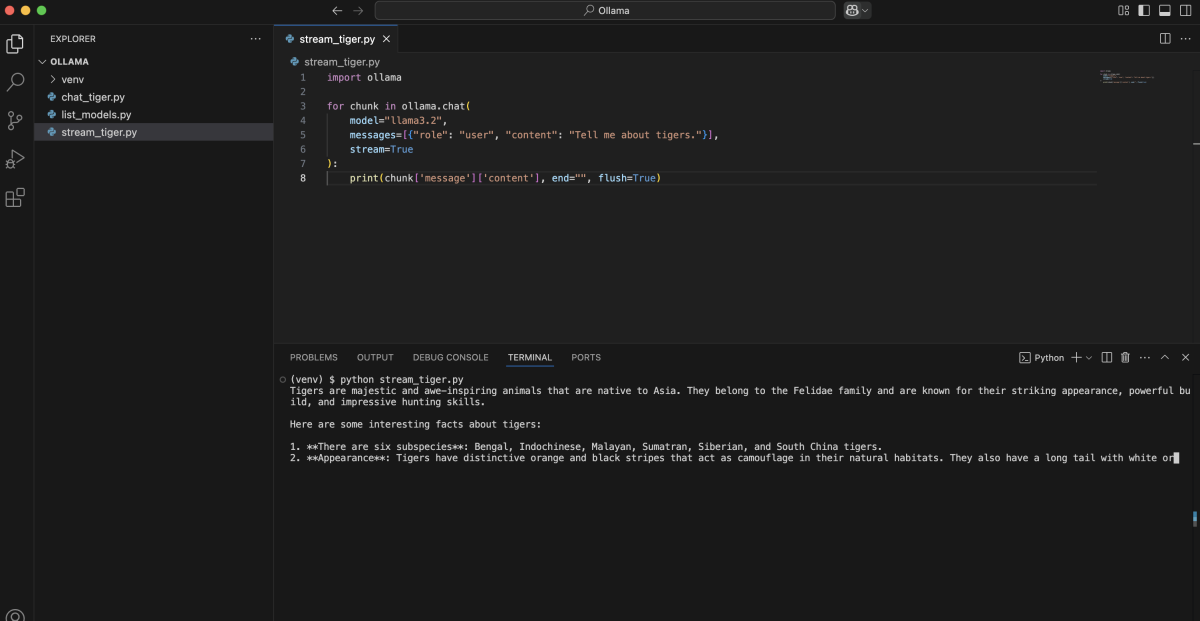

Streaming Responses

For long or real-time outputs, you can stream model responses as they are generated. This is useful for chat UIs or applications that need to display content as it arrives.

How to run:

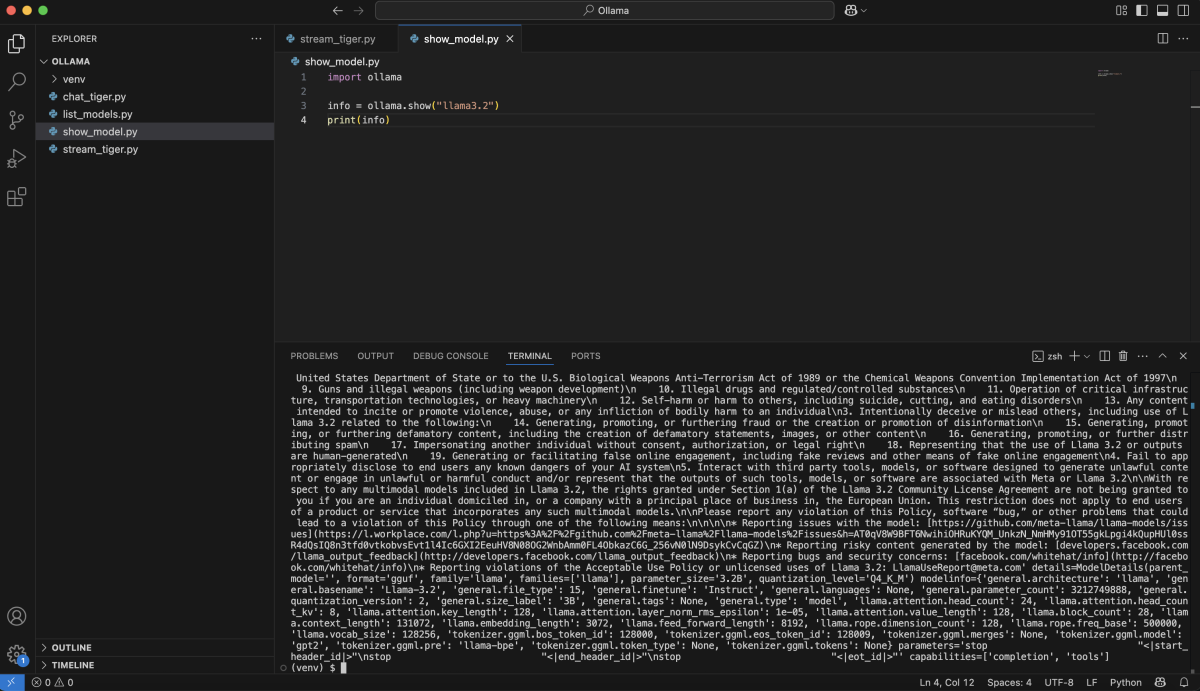

Using the `show` Function

You can inspect detailed information about any local model (metadata, creation time, config, etc.) with the `show` function.

How to run:

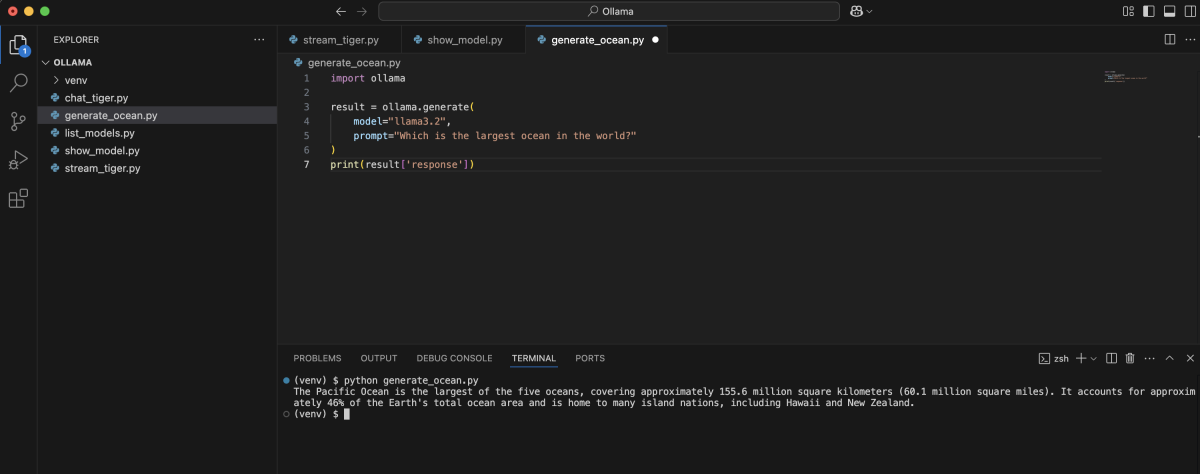

Generating Text (Single Prompt)

To generate text from a single prompt rather than a multi-turn chat, use the `generate` function.

How to run:

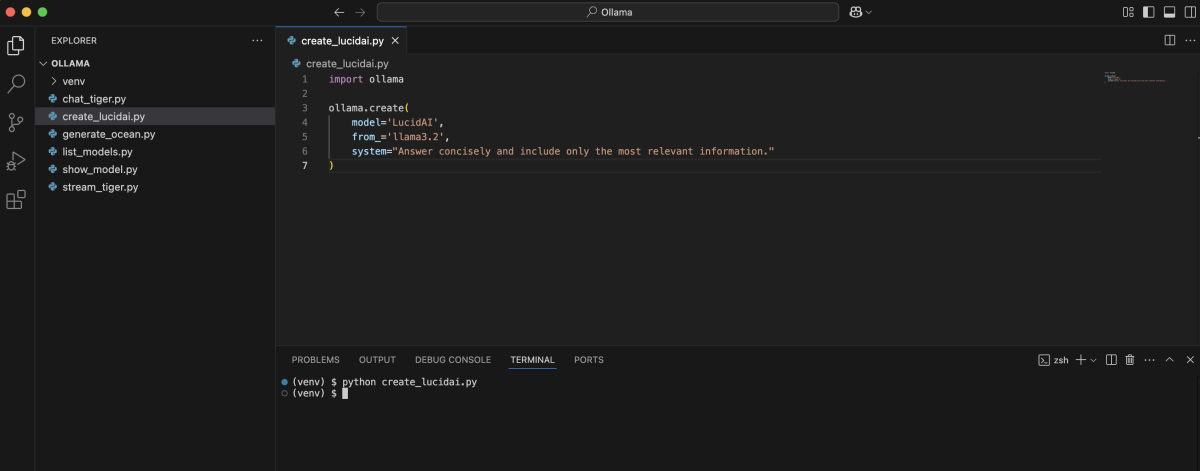

Creating and Managing Custom Models

You can create, use, and delete custom models directly from Python with the Ollama library.

Create a Custom Model

How to run:

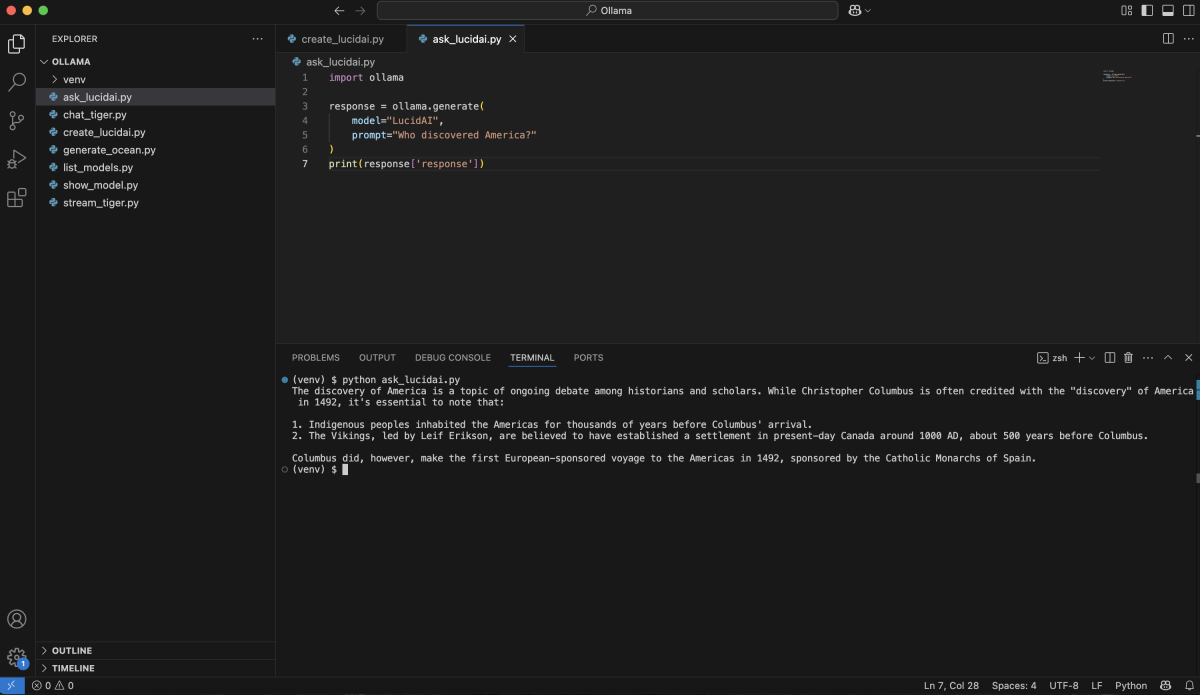

Use the Custom Model

How to run:

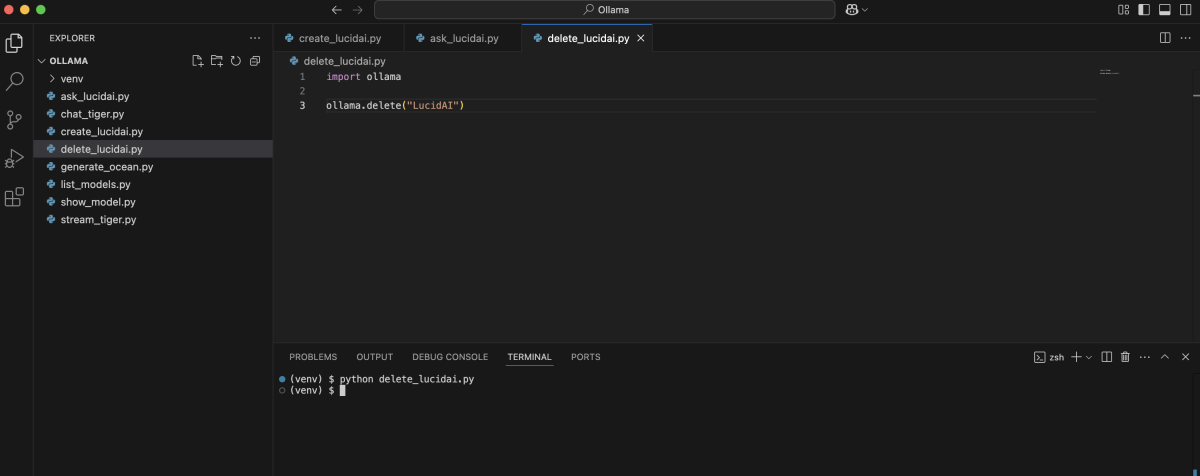

Delete a Model

How to run:

In the next articles, you'll see how to build practical LLM-powered applications using these techniques!