Ollama provides a set of command-line tools for managing, running, and experimenting with local AI models on your own machine. Here are the most common Ollama CLI commands for a day-to-day workflow.

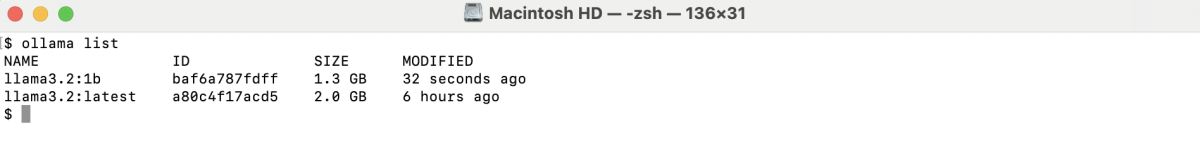

Step 1: List Installed Models

To view all models installed locally, run `ollama list`.

This displays each model's name (including its tag), ID, size, and when it was last modified.
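As a minimal sketch, assuming the `ollama` binary is installed and on your `PATH`, the step looks like this in a terminal. The fallback message is an illustrative addition, not something Ollama prints itself:

```shell
# List every model stored locally (assumes `ollama` is on PATH).
# The `|| echo` fallback is an illustrative addition that prints a hint on failure.
ollama list || echo "ollama list failed: is Ollama installed and running?"
```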

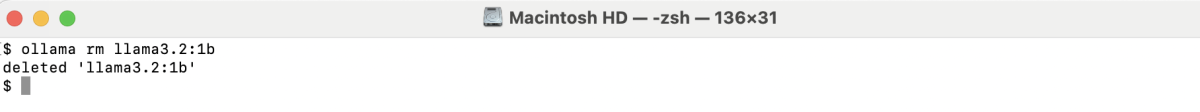

Step 2: Remove an Unused Model

To delete a model you no longer need, run `ollama rm` followed by the model name.

For example, `ollama rm llama3.2:1b` removes the llama3.2:1b model.

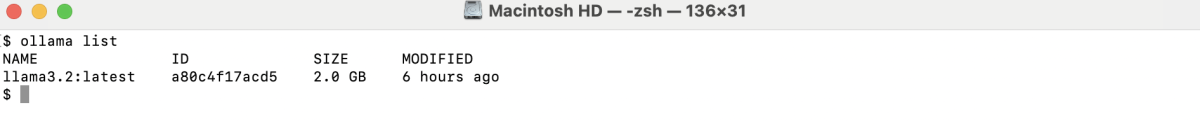

After removing it, run `ollama list` again to confirm the model is gone.
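The remove-then-verify sequence can be sketched as follows. The `MODEL` variable and the fallback messages are illustrative assumptions; only `ollama rm` and `ollama list` come from the CLI itself:

```shell
# Model to delete; llama3.2:1b is the example used in this step.
MODEL="llama3.2:1b"

# Remove the model, then list what remains to confirm it is gone.
# The `|| echo` fallbacks are illustrative additions, not part of Ollama.
ollama rm "$MODEL" || echo "could not remove $MODEL"
ollama list || echo "ollama list failed: is Ollama installed and running?"
```

Keeping the model name in a variable makes it harder to remove one model and then check for a different one by mistake.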

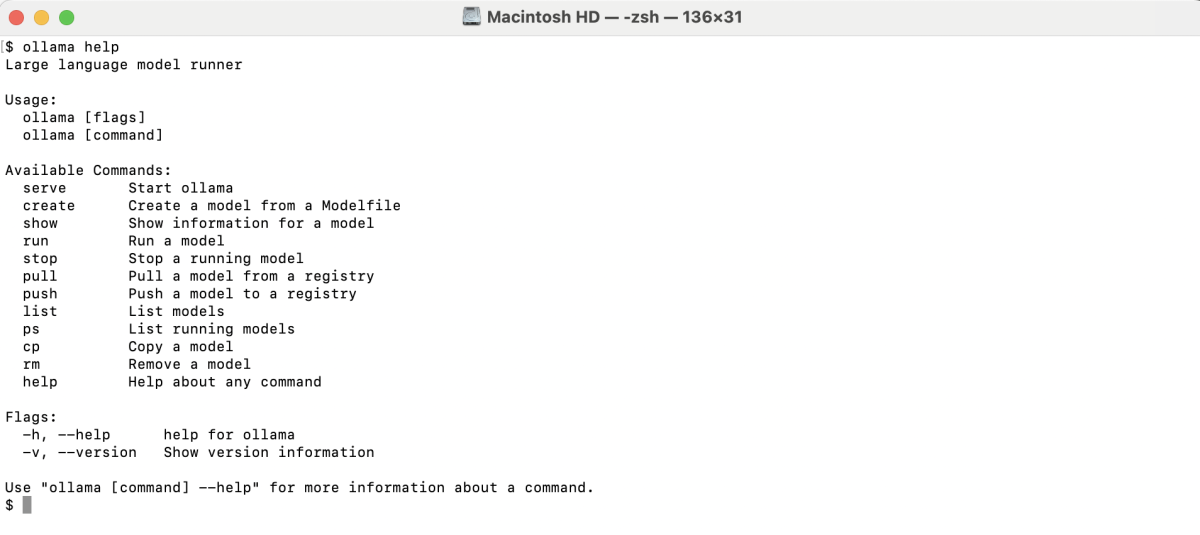

Step 3: Get Help and See Available Commands

To see all available commands or get help with the Ollama CLI, run `ollama --help`.

You'll see a list of commands such as serve, create, show, run, stop, pull, push, list, ps, and rm.
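A short sketch of both the top-level help and the help for a single subcommand, assuming `ollama` is on your `PATH`. The choice of `run` as the subcommand is only an example:

```shell
# Show the top-level help, including the full list of subcommands.
ollama --help || echo "ollama --help failed: is Ollama installed?"

# Show help for one subcommand (here: run); any listed subcommand works.
ollama help run || echo "ollama help run failed: is Ollama installed?"
```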

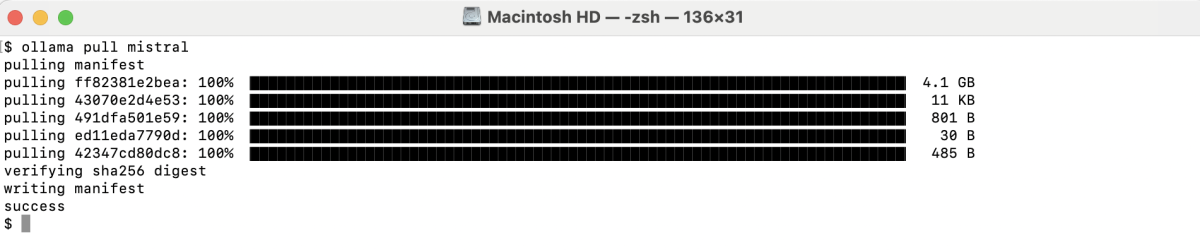

Step 4: Download a New Model

To pull a new model onto your machine, run `ollama pull` followed by the model name, for example `ollama pull mistral`.

You can find the latest model names in the official Ollama model library.
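In a terminal, the download might look like the sketch below. It assumes network access and a running Ollama installation; the `MODEL` variable and the fallback message are illustrative:

```shell
# Model to download; mistral is the example used in this step.
MODEL="mistral"

# Download the model from the Ollama library (this can take a while for large models).
ollama pull "$MODEL" || echo "could not pull $MODEL"
```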

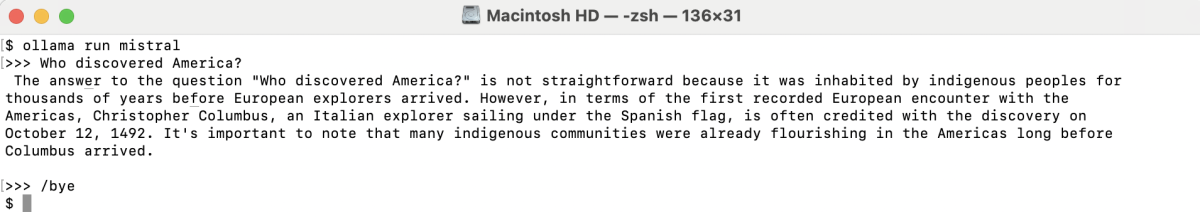

Step 5: Run a Model and Start a Chat Session

To start an interactive chat session with a model in your terminal, run `ollama run` followed by the model name.

Type your questions or prompts, and Ollama generates responses directly in the terminal.
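A minimal sketch of starting a session, assuming the mistral model from Step 4 is the one you want to chat with (the model name here is an assumption; use any model shown by `ollama list`):

```shell
# Model to chat with; mistral is assumed here because it was pulled in Step 4.
MODEL="mistral"

# Open an interactive prompt. Type /bye at the prompt to end the session.
ollama run "$MODEL" || echo "could not run $MODEL"
```

If the model is not yet on your machine, `ollama run` downloads it first, so a separate pull is optional.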

Step 6: View Model Details

To see a model's configuration and metadata, run `ollama show` followed by the model name.
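For instance, assuming the mistral model is installed (the model name is an assumption for illustration), the details can be inspected like this:

```shell
# Model to inspect; mistral is an assumed example.
MODEL="mistral"

# Print a summary of the model's configuration and metadata.
ollama show "$MODEL" || echo "could not show $MODEL"

# Print the Modelfile the model was built from.
ollama show "$MODEL" --modelfile || echo "could not show Modelfile for $MODEL"
```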

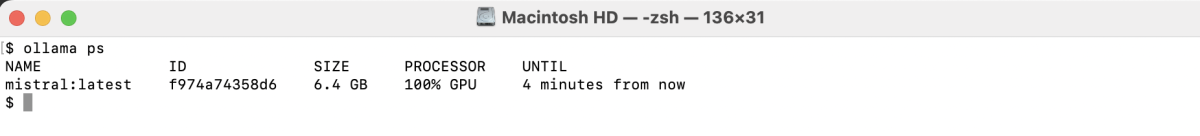

Step 7: List Running Models

To see which models are currently running, run `ollama ps`.

This lists every model that Ollama currently has loaded.
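Sketched as a terminal command, again assuming `ollama` is on your `PATH` (the fallback message is an illustrative addition):

```shell
# Show the models that are currently loaded and running.
ollama ps || echo "ollama ps failed: is Ollama installed and running?"
```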

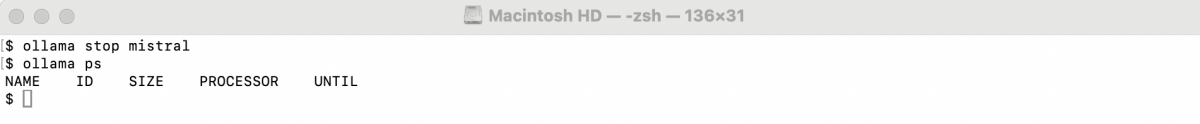

Step 8: Stop a Running Model

To stop a specific running model, run `ollama stop` followed by the model name.

For example, `ollama stop mistral` stops the mistral model.
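One way to stop the model and confirm the result is sketched below. The `MODEL` variable and the fallback messages are illustrative assumptions:

```shell
# Model to stop; mistral is the example used in this step.
MODEL="mistral"

# Stop the model, then list running models to confirm it is no longer loaded.
ollama stop "$MODEL" || echo "could not stop $MODEL"
ollama ps || echo "ollama ps failed: is Ollama installed and running?"
```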

These basic CLI commands are all you need to get started with managing and interacting with Ollama models. Try each one out to get comfortable working with local AI models on your own machine.