In this section, you'll learn how to build a hands-on function calling workflow with Ollama that lets your LLM call external Python functions (tools). We'll walk through a practical scenario: categorizing a list of electronics items, fetching the price and technical specifications of each item, and finally generating a recommendation for a random category. Below, you'll find the full, runnable code and a detailed explanation of each step.

Why Function Calling Matters for LLMs

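Large language models (LLMs) are powerful, but they only know what was in their training data. They can't access up-to-date or external information on their own, and they can "hallucinate" facts. Function calling addresses this. The model doesn't run anything itself. Instead, it can respond with a structured request naming a function and its arguments, and your code executes that function and sends the result back. This lets the model work with real-world data and trigger actions it couldn't perform alone. In this example, we enable the model to: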

- Categorize a list of electronics items.

- Fetch price and specifications data for each item via tool calling.

- Fetch a recommendation for a random electronics category.

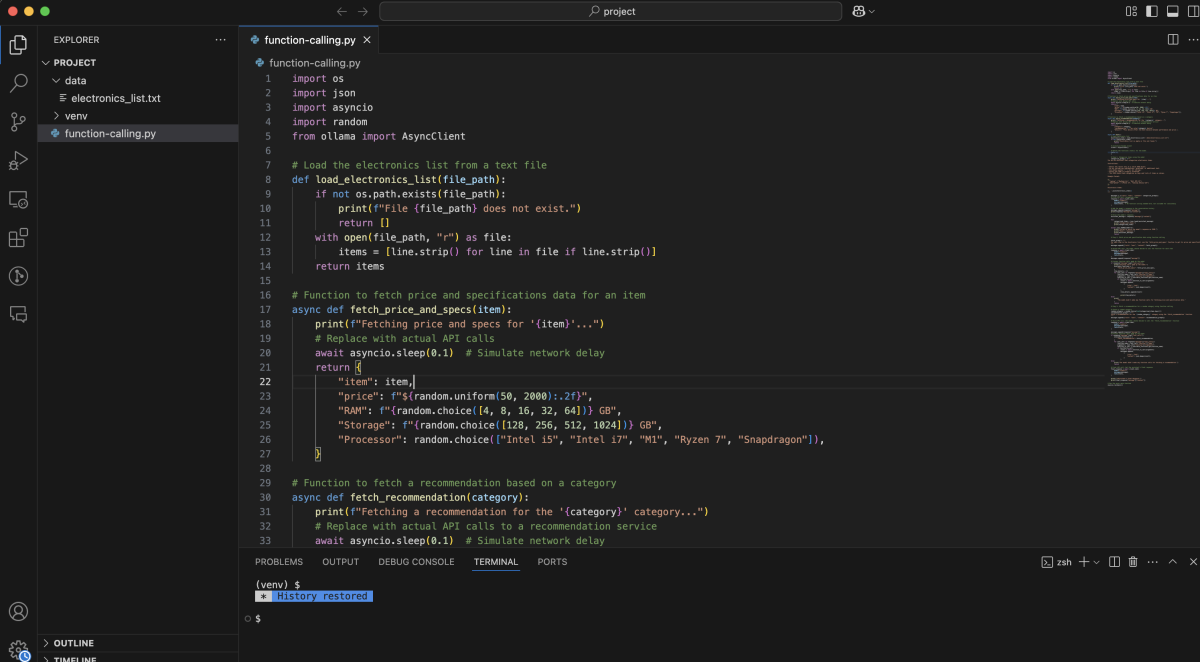

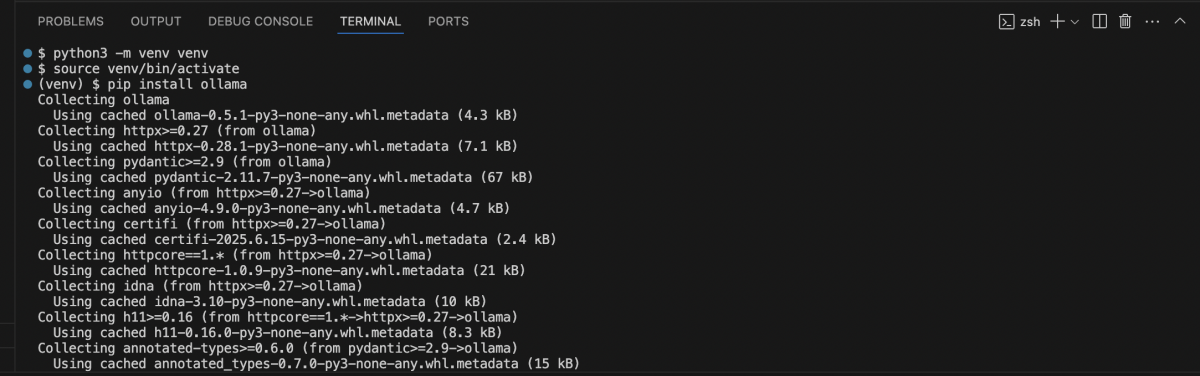

Environment Setup

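Before writing any code, make sure Ollama is installed and running, the `ollama` Python package is installed (`pip install ollama`), and you have pulled a model that supports tool calling (for example, `ollama pull llama3.1`).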
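To confirm the setup before going further, a small preflight check can test two things: whether the `ollama` Python package is importable, and whether a local Ollama server answers HTTP requests. The sketch below is our own and is not part of the article's code. The helper names are hypothetical, and it assumes the server listens on Ollama's default address, `http://localhost:11434`. It uses only the standard library, so it runs even before `ollama` is installed.

```python
import http.client
import importlib.util
import urllib.request

# Assumption: Ollama is serving on its default local address.
OLLAMA_URL = "http://localhost:11434"


def ollama_package_installed() -> bool:
    """Return True if the `ollama` Python package can be imported."""
    return importlib.util.find_spec("ollama") is not None


def ollama_server_running(url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP GET to the server URL succeeds with status 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # URLError, HTTPError, refused connections and timeouts are OSError subclasses.
        return False


if __name__ == "__main__":
    print("ollama package installed:", ollama_package_installed())
    print("Ollama server reachable: ", ollama_server_running())
```

If either check prints `False`, fix the installation before running the tool-calling script. Otherwise its errors will be harder to interpret.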

Setting Up: Defining Tools and Functions

We'll define three main Python functions:

- load_electronics_list: Loads items from a text file.

- fetch_price_and_specs: Returns fake price/specification data (you could connect to a real API).

- fetch_recommendation: Returns a fake recommendation based on a category (also can be replaced with a real API).
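A minimal sketch of these three functions might look like the following. Several details are our assumptions: the parameter names, the one-item-per-line file format, the extra `parse_electronics_list` helper, and the placeholder values. As in the article, the two fetch functions return fake data, and they are the place to plug in a real API.

```python
import random
from pathlib import Path


def parse_electronics_list(text: str) -> list[str]:
    """Hypothetical helper: one item per line, ignoring blank lines and stray spaces."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def load_electronics_list(file_path: str) -> list[str]:
    """Load electronics items from a UTF-8 text file."""
    return parse_electronics_list(Path(file_path).read_text(encoding="utf-8"))


def fetch_price_and_specs(item: str) -> dict:
    """Return FAKE price and spec data for an item (replace with a real API call)."""
    return {
        "item": item,
        "price_usd": round(random.uniform(50, 1500), 2),  # placeholder price
        "specs": f"Placeholder specifications for {item}",
    }


def fetch_recommendation(category: str) -> dict:
    """Return a FAKE recommendation for a category (replace with a real API call)."""
    return {
        "category": category,
        "recommendation": f"Placeholder top pick in {category}",
    }
```

Keeping the placeholder logic inside these functions means the LLM-facing part of the script does not need to change when you swap in real data sources.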

We also define tool metadata (each tool's name, a description, and a JSON Schema for its parameters) so the LLM knows which functions exist and how to call them.
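Ollama's chat API accepts tool definitions as a list of JSON-Schema-style dictionaries, and the Python client takes them through the `tools` argument of `ollama.chat`. The sketch below describes the two fetch functions this way. The descriptions and the parameter names `item` and `category` are assumptions, and they must match whatever signatures your functions actually use. Because the script calls `load_electronics_list` directly rather than the model, it plausibly needs no tool entry, though the article's own code may differ.

```python
# Tool metadata in the JSON-Schema format Ollama's chat API accepts.
# Descriptions and parameter names are illustrative assumptions.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "fetch_price_and_specs",
            "description": "Get the price and technical specifications of an electronics item.",
            "parameters": {
                "type": "object",
                "properties": {
                    "item": {
                        "type": "string",
                        "description": "Name of the electronics item, e.g. 'Laptop'.",
                    }
                },
                "required": ["item"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "fetch_recommendation",
            "description": "Get a product recommendation for an electronics category.",
            "parameters": {
                "type": "object",
                "properties": {
                    "category": {
                        "type": "string",
                        "description": "Electronics category, e.g. 'Audio'.",
                    }
                },
                "required": ["category"],
            },
        },
    },
]
```

The model sees only these names, descriptions, and schemas, so clear descriptions matter for getting the right tool called with the right arguments.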

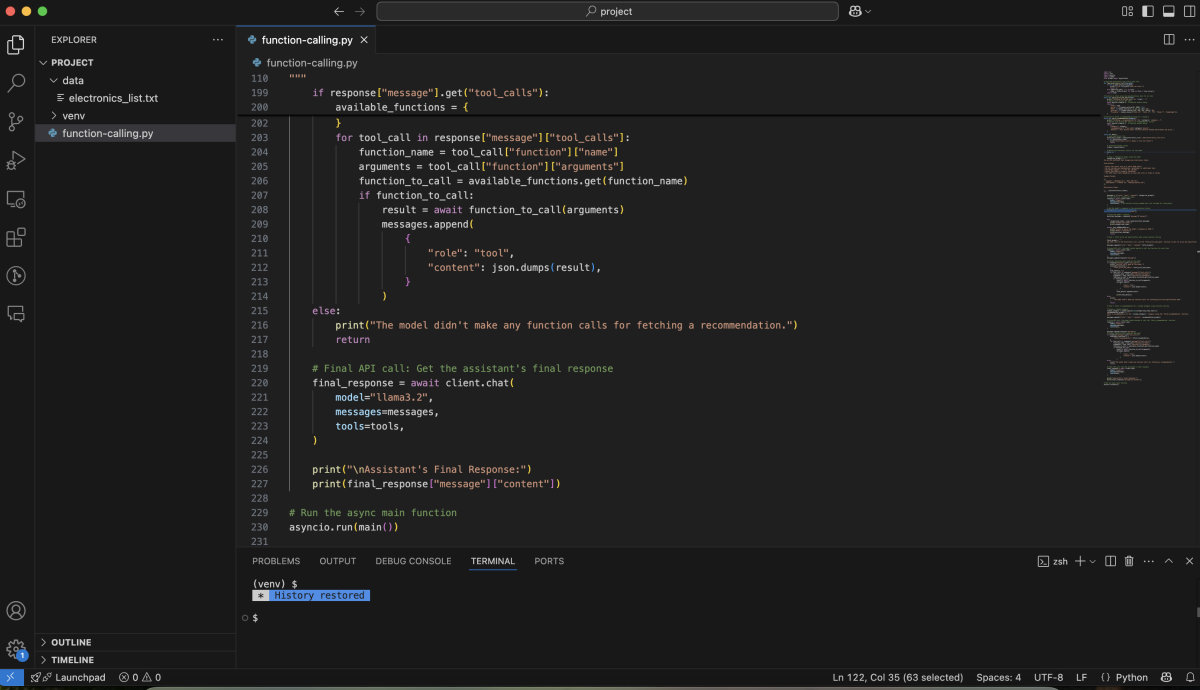

Full Pipeline: Categorize, Call Tools, and Process Results

The main function orchestrates the flow:

- Loads the electronics list.

- Defines the tool metadata for the LLM.

- Sends a prompt to categorize items.

- Instructs the model to call the price/specs function for each item.

- Has the model call the recommendation function for a random category.

- Maintains conversation history for multi-turn interactions.
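The steps above can be sketched as a loop that keeps calling the model until it stops requesting tools. Everything happens inside one shared `messages` list, so each step sees the earlier ones. This is our own minimal version, not the article's code. The prompt wording and the helper names (`dispatch_tool_calls`, `chat_with_tools`, `run_pipeline`) are hypothetical, and the chat function is passed in as a parameter. With the real client you would pass `ollama.chat`, whose response objects can be indexed like dictionaries (`response["message"]`). For testing, any function that returns the same shape works.

```python
import json
from typing import Any, Callable


def dispatch_tool_calls(
    message: Any,
    available_functions: dict[str, Callable[..., Any]],
    messages: list,
) -> int:
    """Execute each tool call in an assistant message and append the results
    to the conversation as 'tool' messages. Returns the number of calls run."""
    tool_calls = message.get("tool_calls") or []
    for call in tool_calls:
        name = call["function"]["name"]
        args = call["function"]["arguments"]
        if isinstance(args, str):  # tolerate arguments encoded as a JSON string
            args = json.loads(args)
        func = available_functions.get(name)
        if func is not None:
            result = func(**args)
        else:
            result = {"error": f"unknown tool {name}"}
        # Tool results go back to the model as text.
        content = result if isinstance(result, str) else json.dumps(result)
        messages.append({"role": "tool", "content": content})
    return len(tool_calls)


def chat_with_tools(
    chat_fn: Callable[..., Any],
    model: str,
    messages: list,
    tools: list[dict],
    available_functions: dict[str, Callable[..., Any]],
    max_rounds: int = 5,
) -> str:
    """Call the model, run any requested tools, and repeat until it replies
    without tool calls (or max_rounds is reached)."""
    for _ in range(max_rounds):
        response = chat_fn(model=model, messages=messages, tools=tools)
        message = response["message"]
        messages.append(message)  # keep the assistant turn in the history
        if dispatch_tool_calls(message, available_functions, messages) == 0:
            return message["content"]
    raise RuntimeError("Model was still requesting tools after max_rounds.")


def run_pipeline(chat_fn, model, items, tools, available_functions) -> list[str]:
    """Run the three steps in order, sharing one conversation history."""
    prompts = [  # hypothetical prompt wording
        "Categorize these electronics items: " + ", ".join(items),
        "Call fetch_price_and_specs for each item and summarize the results.",
        "Pick one of your categories at random and call fetch_recommendation for it.",
    ]
    messages: list = []
    answers = []
    for prompt in prompts:
        messages.append({"role": "user", "content": prompt})
        answers.append(chat_with_tools(chat_fn, model, messages, tools, available_functions))
    return answers
```

In a full script, `available_functions` would map each tool name in the metadata to the Python function of the same name, for example `{"fetch_price_and_specs": fetch_price_and_specs, "fetch_recommendation": fetch_recommendation}`. The `max_rounds` cap is a defensive choice of ours. It stops a model that keeps requesting tools from looping forever.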

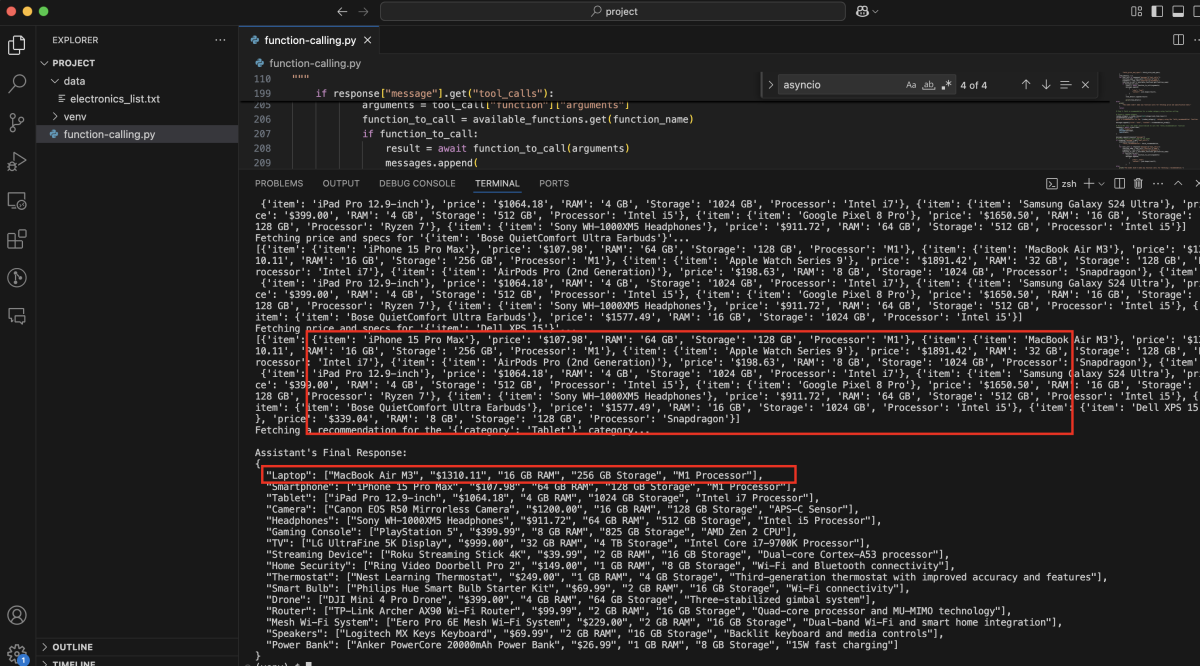

Run the Script

The result will look like this:

This example shows how function calling turns an LLM into an agent that can act on external data. Here the tools return placeholder values, but if you connect them to real APIs, the same few tools let your model categorize items, fetch information, and give contextual recommendations, all running locally with Ollama.