Ollama provides a powerful REST API that allows you to interact with local language models programmatically from any language, including Python. In this guide, you'll learn how to use Python to call the Ollama REST API for text generation and chat, including how to process streaming responses.

Why Use the REST API?

While Ollama's CLI and third-party GUIs are great for quick experimentation, building real applications often requires backend integration. The REST API lets you:

- Send prompts and receive completions from local models

- Stream long responses for real-time interaction

- Integrate with your own Python code, web apps, or data pipelines

Prerequisites

- Ollama is installed and running locally (start it with ollama serve or by launching the desktop app).

- Python environment set up

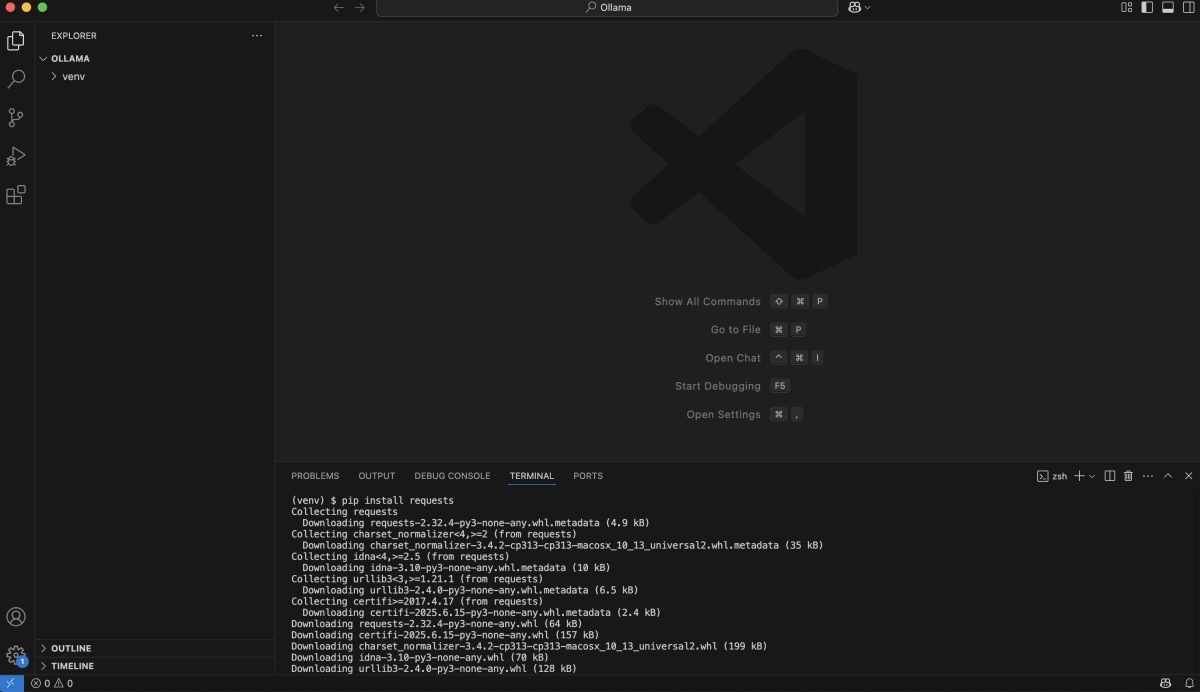

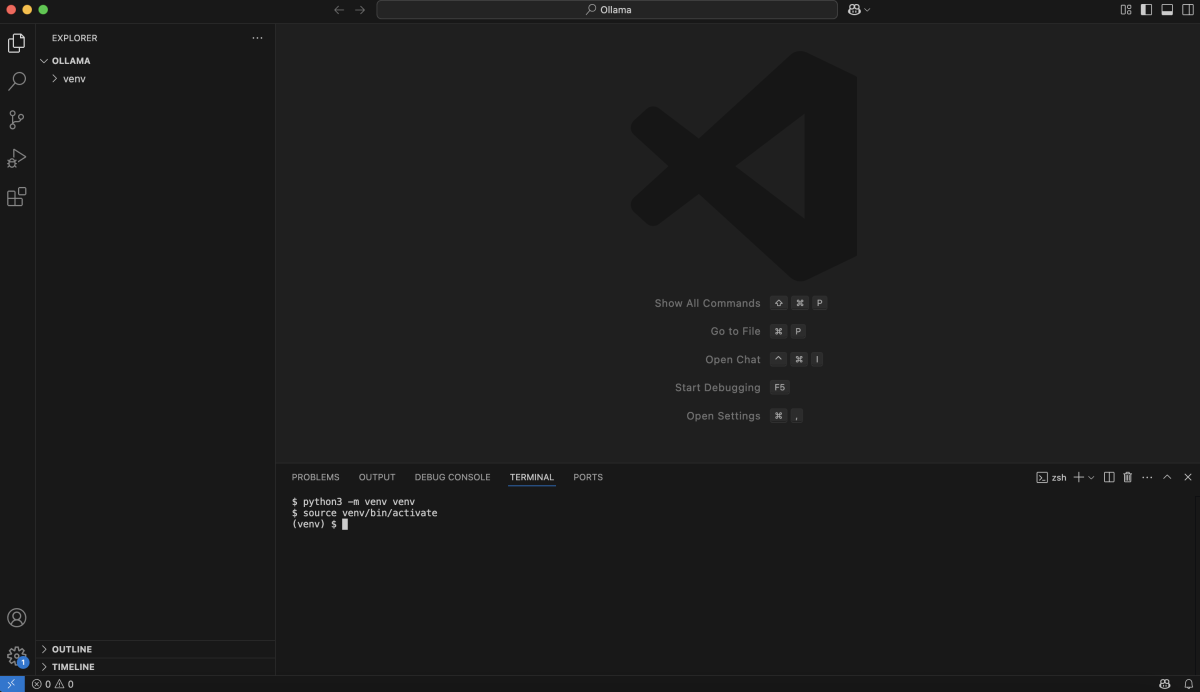

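- Create and activate a virtual environment, for example with python -m venv .venv followed by source .venv/bin/activate (on Windows: .venv\Scripts\activate).

- Install dependencies: you'll need the requests library, which can be installed with pip install requests.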
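Before making any API calls, it can help to confirm that the first prerequisite holds. The sketch below uses only the standard library and assumes Ollama is listening on its default local address, http://localhost:11434; the function name ollama_is_running is a hypothetical helper, not part of Ollama.

```python
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def ollama_is_running(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError (including HTTPError), timeouts, and refused
        # connections are all OSError subclasses.
        return False


if __name__ == "__main__":
    print("Ollama reachable:", ollama_is_running())
```

If this prints False, start the server (ollama serve or the desktop app) and try again.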

Making Basic API Calls with Python

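You can use Python's requests library to interact with the REST API. The main endpoint for text generation is POST /api/generate, which a default local installation serves at http://localhost:11434/api/generate.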

Example: Generate a Description about the Richness of the Ocean

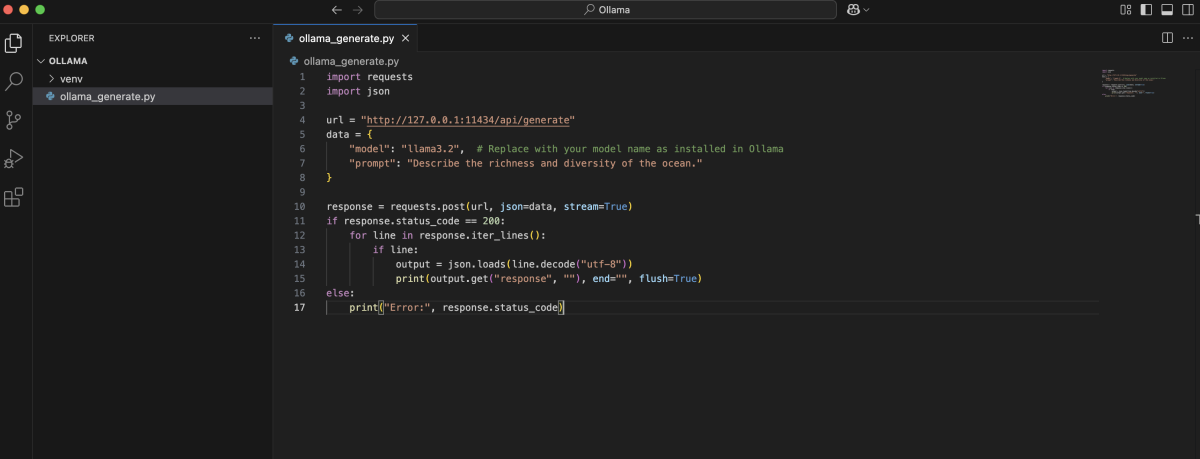

Create a file (for example, ollama_generate.py) with the following code:
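A minimal sketch of such a script is shown here. It assumes the default local address http://localhost:11434 and a model named llama3.2 that has already been pulled; the model name, the prompt wording, and the helper extract_text are illustrative assumptions, so substitute your own.

```python
import json

import requests

# Assumptions: default local address and an already-pulled model.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"
MODEL = "llama3.2"


def extract_text(line: bytes) -> str:
    """Parse one streamed line (a JSON object) and return its text fragment."""
    return json.loads(line).get("response", "")


def main() -> None:
    payload = {
        "model": MODEL,
        "prompt": "Describe the richness and diversity of the ocean.",
    }
    try:
        # stream=True makes requests hand over the body incrementally
        # instead of buffering the whole response first.
        with requests.post(
            OLLAMA_GENERATE_URL, json=payload, stream=True, timeout=120
        ) as response:
            if response.status_code != 200:
                print(f"Error {response.status_code}: {response.text}")
                return
            for line in response.iter_lines():
                if line:  # skip empty keep-alive lines
                    print(extract_text(line), end="", flush=True)
            print()
    except requests.RequestException as exc:
        print(f"Could not reach Ollama: {exc}")


if __name__ == "__main__":
    main()
```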

Explanation

- The JSON payload specifies which model to use and what prompt to send.

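- Passing stream=True to requests.post tells requests to read the response body incrementally instead of buffering it all first. The Ollama API itself streams by default unless the payload sets "stream" to false.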

- The code reads each line of the streamed response and prints the generated text as it arrives.

- Each line in the response is a JSON object containing a "response" field with generated text.

- If the HTTP status code is not 200, an error message is printed.
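To make the line-by-line format concrete, the following sketch parses a few hand-written sample lines without contacting a server. The sample lines are illustrative only: real responses carry additional fields, and the helper name collect_text is hypothetical. The last object in a stream reports "done": true.

```python
import json

# Illustrative lines only, shaped like the /api/generate stream output;
# real responses include further fields such as timestamps and statistics.
sample_lines = [
    b'{"model": "llama3.2", "response": "The ocean ", "done": false}',
    b'{"model": "llama3.2", "response": "is vast.", "done": false}',
    b'{"model": "llama3.2", "response": "", "done": true}',
]


def collect_text(lines) -> str:
    """Concatenate the "response" fragments until a chunk reports done."""
    parts = []
    for raw in lines:
        if not raw:  # skip empty keep-alive lines
            continue
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break
    return "".join(parts)


print(collect_text(sample_lines))  # The ocean is vast.
```

Collecting the fragments this way is useful when you want the full text as a single string rather than printing it as it arrives.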

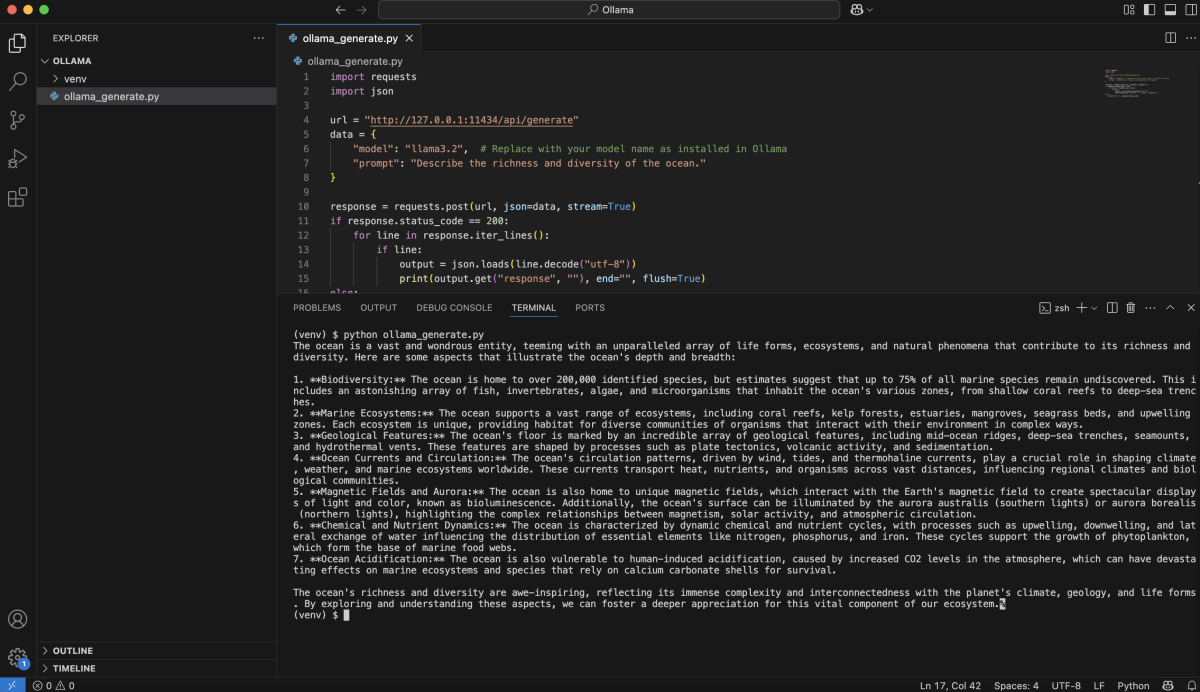

Running Your Python Script

After saving your file (e.g., as ollama_generate.py), run it in your terminal with python ollama_generate.py.

You should see the model's output about the richness and diversity of the ocean streamed live in your console.

Customizing Your Requests

You can customize the payload to adjust model behavior using parameters such as system instructions and options.

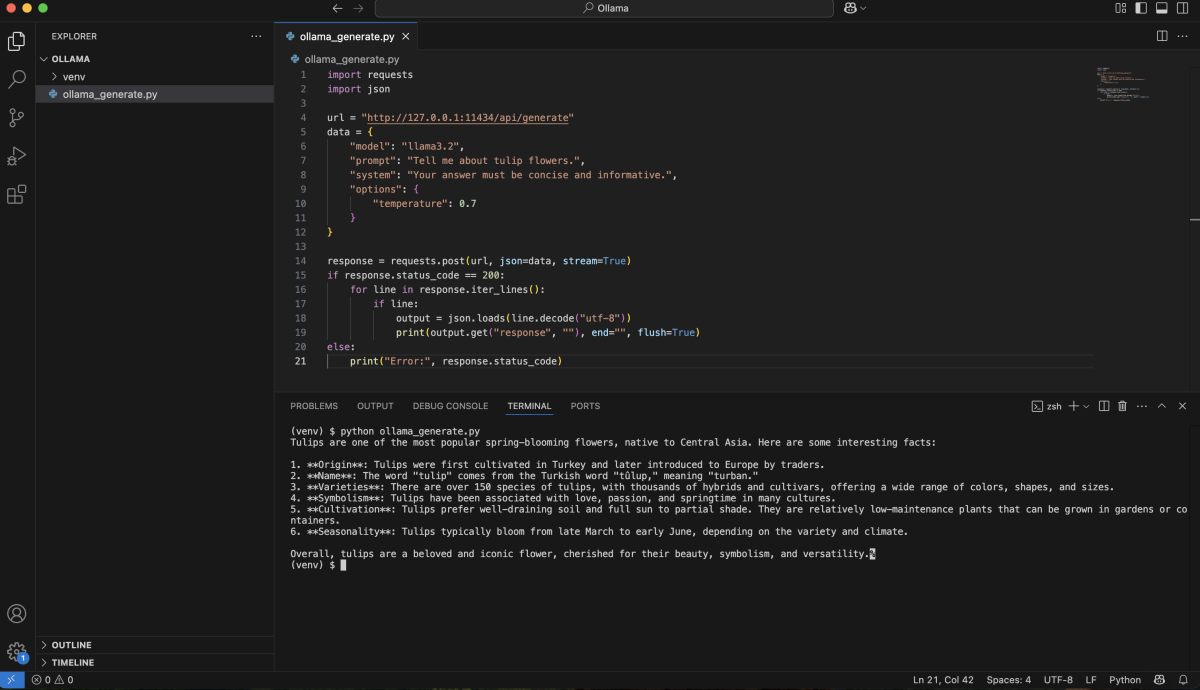

Example: Ask about tulip flowers, require a concise and informative answer, and set temperature in options
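One way this request might look is sketched below. The model name llama3.2, the exact prompt and system wording, the temperature value 0.3, and the helper build_payload are all assumptions chosen for illustration. The sketch also sets "stream" to false so the whole answer arrives as a single JSON object, which keeps the code short.

```python
import requests

# Assumption: Ollama is reachable at its default local address.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def build_payload(model: str, prompt: str, system: str, temperature: float) -> dict:
    """Build a /api/generate body with a system instruction and options."""
    return {
        "model": model,
        "prompt": prompt,
        "system": system,
        "options": {"temperature": temperature},
        "stream": False,  # return one JSON object instead of a stream
    }


def main() -> None:
    payload = build_payload(
        model="llama3.2",  # assumed model name; use one you have pulled
        prompt="Tell me about tulip flowers.",
        system="Give a concise and informative answer.",
        temperature=0.3,
    )
    try:
        response = requests.post(OLLAMA_GENERATE_URL, json=payload, timeout=120)
    except requests.RequestException as exc:
        print(f"Could not reach Ollama: {exc}")
        return
    if response.status_code != 200:
        print(f"Error {response.status_code}: {response.text}")
        return
    # With streaming disabled, the generated text is in the "response" field.
    print(response.json()["response"])


if __name__ == "__main__":
    main()
```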

Explanation

- The "system" field instructs the model to give a concise and informative answer.

- The "prompt" asks specifically about tulip flowers.

- The "options" dictionary lets you set generation parameters such as "temperature": higher values produce more varied, creative output, while lower values produce more focused and predictable output.

In the next article, you'll learn how to use the official Ollama Python library for even more convenient and Pythonic model interaction.