In this article, we'll build a complete Voice-Enabled RAG (Retrieval-Augmented Generation) system using a sample document, pca_tutorial.pdf. The pipeline is similar to classic RAG demos, but now with a new component—voice audio response! We'll use Ollama with LLM/embeddings, ChromaDB for vector storage, LangChain for orchestration, and ElevenLabs for text-to-speech audio output.

Key steps:

- Load and split

pca_tutorial.pdf - Generate embeddings and store in ChromaDB

- Retrieve and answer questions with Ollama LLM

- Convert the LLM answer to speech using ElevenLabs

Getting the ElevenLabs API Key

To enable voice output, you'll need an ElevenLabs API key (they offer free credit).

Sign up at https://elevenlabs.io, then:

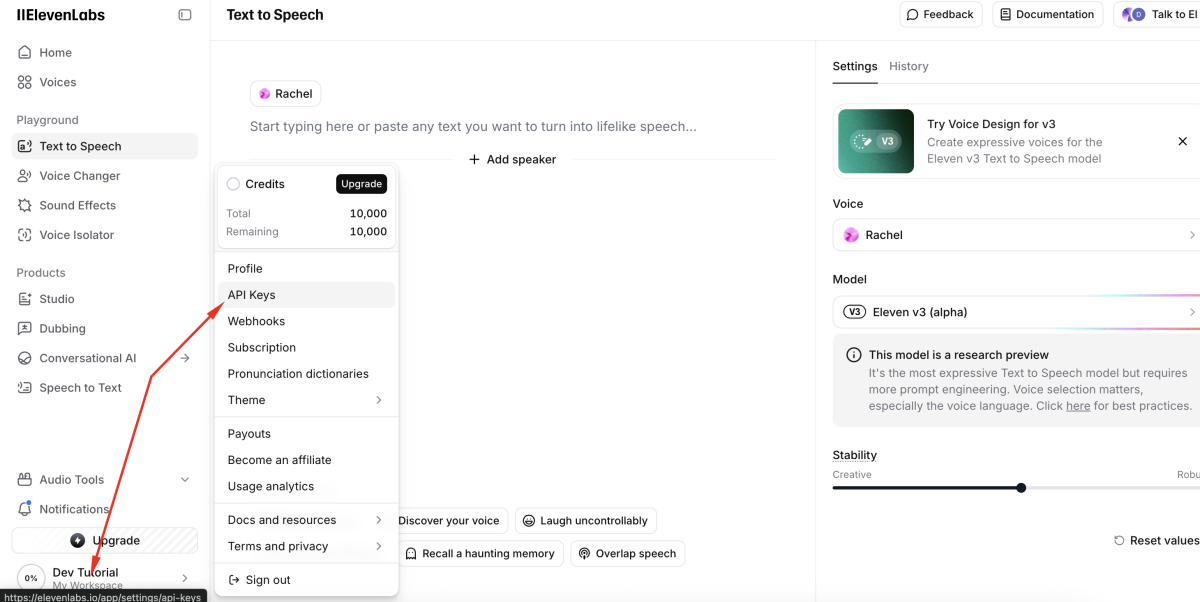

- Go to your dashboard, click on "Your profile", and select "API Key".

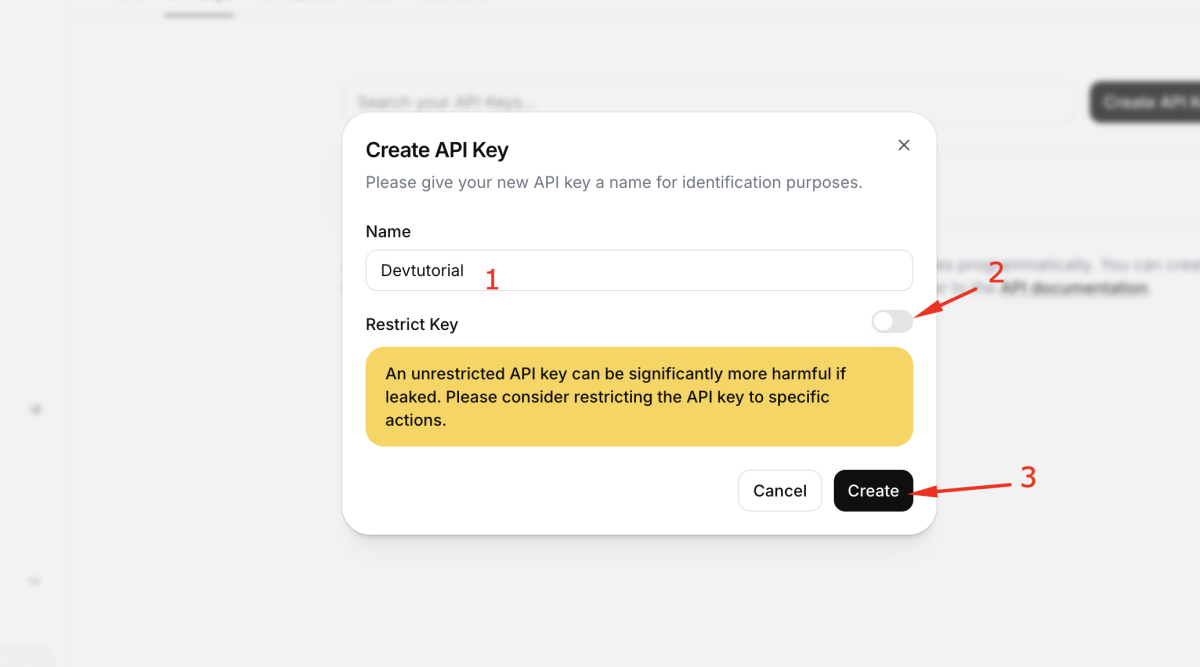

- Create a new key, name it, and copy it securely

You'll store this API key in a .env file for your project:

The Pipeline: Loading, Splitting, Embedding, and Retrieval with pca_tutorial

Let's walk through the main pipeline using your pca_tutorial.pdf.

Load and Split pca_tutorial.pdf

Use UnstructuredPDFLoader from langchain_community to load pca_tutorial.pdf, then split the document into overlapping chunks for embedding.

Embed Chunks into Chroma Vector Database

Use Ollama's embedding model to convert chunks into vectors and store them in a ChromaDB collection.

Setup the Retriever with Multi-Query

Use MultiQueryRetriever for robust question rephrasing and retrieval, boosting RAG performance.

Ask Questions with RAG Chain

Build a RAG chain with a context-injection prompt, and get high-quality answers from your LLM using the retrieved context.

Generate and Play Voice Output with ElevenLabs

Take the LLM's answer, synthesize it with ElevenLabs, and play it directly.

You could also save the audio stream to a file for future playback.

Full Example Code

Below is the complete code for building your Voice-Enabled RAG System using pca_tutorial.pdf with Ollama, ChromaDB, LangChain, and ElevenLabs.

With just a few tools and APIs, we've built a complete voice-enabled RAG system. This setup bridges text and speech, making AI interactions more dynamic and intuitive.