In this article, we'll build a practical, local LLM-powered application: an electronic devices list organizer that automatically categorizes items using Ollama and the Llama 3.2 model. You'll see how to read raw data, design a prompt, interact with the model, and output a neatly organized result—completely offline.

Overview

Suppose you have a simple text file containing an uncategorized list of electronic devices. Our goal is to use Ollama's local LLM capabilities to:

- Read the device list from a file

- Prompt the LLM to sort and categorize the items (e.g., computers, smartphones, home appliances, audio devices)

- Output the categorized, alphabetized list to both the terminal and a new file

This project demonstrates how local LLMs can help automate everyday tasks, and how easy it is to swap models or prompts for different results.
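To make the "swap models or prompts" point concrete, here is a small sketch that builds the request separately from sending it. The helper name `build_request`, the instruction wording, and the sample device names are illustrative assumptions; `mistral` is only an example of another model tag you might have pulled locally.

```python
def build_request(items, model="llama3.2", instruction=None):
    """Return keyword arguments for a chat call; model and instruction are swappable."""
    if instruction is None:
        # Default wording is an assumption, not a prompt taken from the article.
        instruction = (
            "Categorize these electronic devices and sort each category alphabetically."
        )
    content = instruction + "\n\n" + "\n".join(items)
    return {"model": model, "messages": [{"role": "user", "content": content}]}


# Made-up sample items for illustration only.
devices = ["Laptop", "Bluetooth speaker", "Microwave"]

# Default model and prompt.
default_request = build_request(devices)

# Same data, different model and different instruction: one argument each.
other_request = build_request(
    devices,
    model="mistral",
    instruction="Group these devices by the room they are usually found in.",
)
```

With the `ollama` Python package installed and the Ollama server running, either dictionary could be passed on as `ollama.chat(**default_request)`.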

Project Structure

- device_list.txt: Your raw electronic device items (one per line)

- categorized_device_list.txt: The output, neatly categorized

- categorizer.py: The main Python script

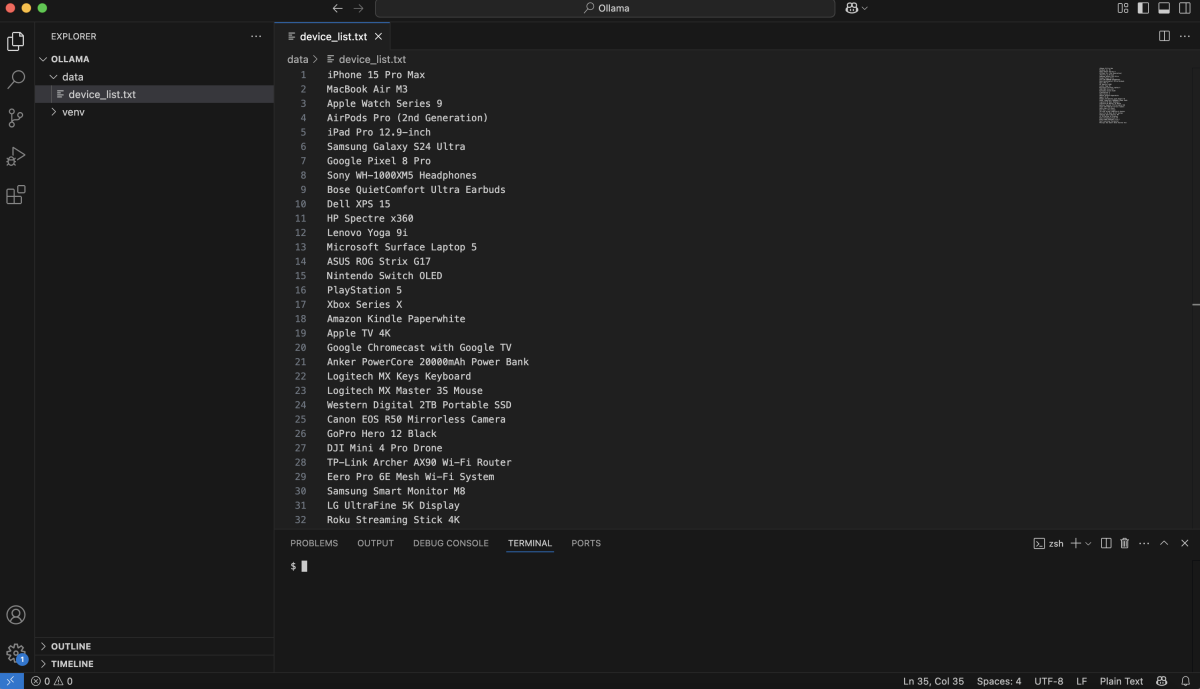

Example Input (data/device_list.txt)

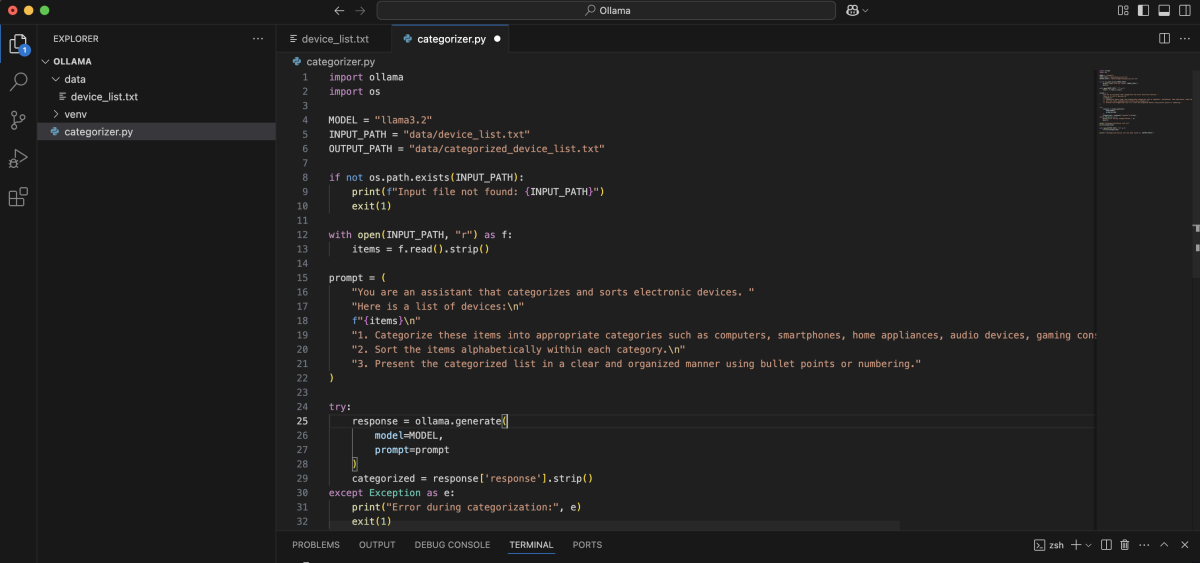

The Categorizer Python Script

Create categorizer.py as follows:
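A minimal sketch of what such a script might look like, assuming the `ollama` Python package is installed and the `llama3.2` model has been pulled locally. The file paths come from this article; the function names, the prompt wording, and the optional `chat_fn` parameter are illustrative assumptions rather than the exact original code.

```python
"""categorizer.py: sort and categorize a device list with a local LLM (sketch)."""
from pathlib import Path

# Paths as used in this article; "llama3.2" is the Ollama tag for Llama 3.2.
INPUT_FILE = Path("data/device_list.txt")
OUTPUT_FILE = Path("data/categorized_device_list.txt")
MODEL = "llama3.2"


def read_items(path):
    """Return the non-empty, stripped lines of the input file."""
    text = path.read_text(encoding="utf-8")
    return [line.strip() for line in text.splitlines() if line.strip()]


def build_prompt(items):
    """Combine the instruction with the raw list (wording is an assumption)."""
    return (
        "You are an assistant that organizes lists of electronic devices.\n"
        "Group the items below into categories such as computers, smartphones, "
        "home appliances, and audio devices. Sort the items alphabetically "
        "within each category and return only the categorized list.\n\n"
        + "\n".join(items)
    )


def categorize(items, model=MODEL, chat_fn=None):
    """Send the prompt to the model and return its text reply."""
    if chat_fn is None:
        # Imported lazily so the helpers above work without the package.
        import ollama
        chat_fn = ollama.chat
    response = chat_fn(
        model=model,
        messages=[{"role": "user", "content": build_prompt(items)}],
    )
    return response["message"]["content"].strip()


def main():
    if not INPUT_FILE.exists():
        print(f"Input file not found: {INPUT_FILE}")
        return
    result = categorize(read_items(INPUT_FILE))
    print(result)  # terminal output
    OUTPUT_FILE.write_text(result + "\n", encoding="utf-8")  # file output


if __name__ == "__main__":
    main()
```

Keeping file reading, prompt building, and the model call in separate functions means each piece can be changed or tested on its own. The `chat_fn` hook exists only so the model call can be replaced with a stand-in during testing.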

Running the Application

Activate your environment and run:

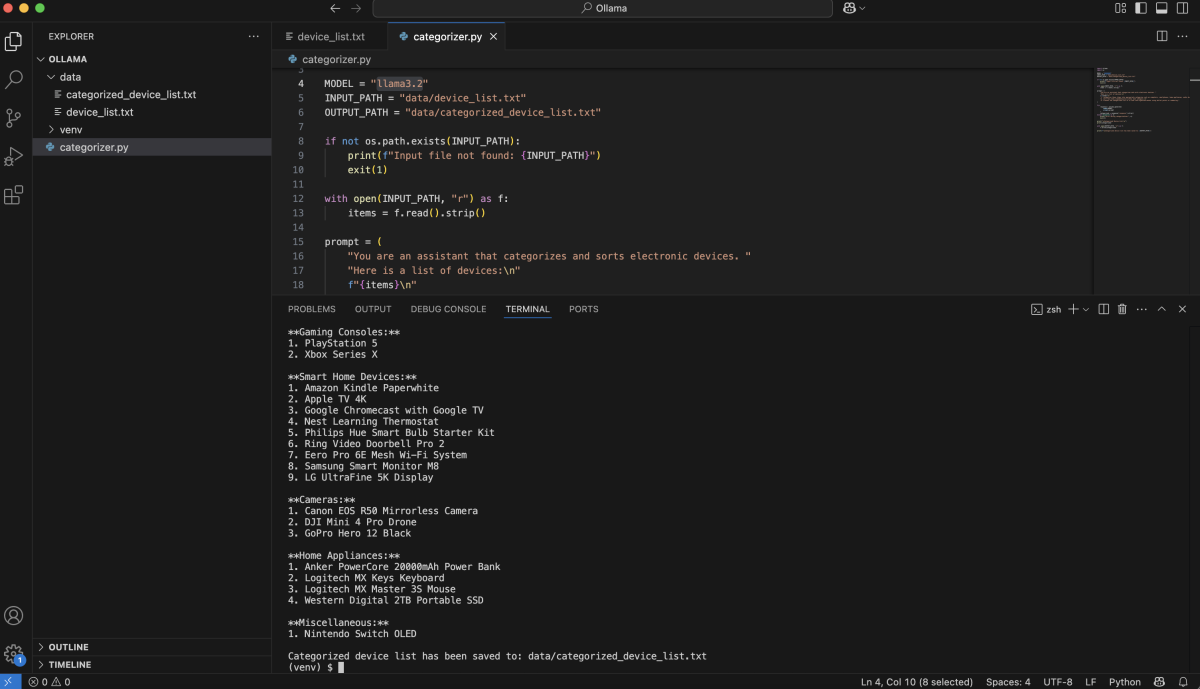

You'll see both the terminal output and a new file data/categorized_device_list.txt containing the neatly organized device list.

Sample Output

With just a few lines of code and a local LLM, you've automated a real-world task—no cloud, no API keys, and full privacy. Experiment with your own data and ideas!